Cursor is an AI-enhanced code editor. You click and an LLM auto-completes your code! It’s the platform of choice for “vibe coding,” where you get the AI to write your whole app for you. This has obvious and hilarious failure modes.

On Monday, Cursor started forcibly logging out users if they were logged in from multiple machines. Users contacted support, who said this was now expected behaviour: [Reddit, archive]

Cursor is designed to work with one device per subscription as a core security feature. To use Cursor on both your work and home machines, you’ll need a separate subscription for each device.

The users were outraged at being sandbagged like this. A Reddit thread was quickly removed by the moderators — who are employees of Cursor. [Reddit, archive, archive]

Cursor co-founder Michael Truell explained that this was all a mistake and that Cursor had no such policy: [Reddit]

Unfortunately, this is an incorrect response from a front-line AI support bot.

Cursor support was an LLM! The bot answered with something shaped like a support response! It hallucinated a policy that didn’t exist!

Cursor’s outraged customers will forget all this by next week. It’s an app for people who somehow got a developer job but have no idea what they’re doing. They pay $8 million each month so a bot will code for them. [Bloomberg, archive]

Cursor exists to bag venture funding while the bagging is good — $175 million so far, with more on the way. None of this ever had to work. [Bloomberg, archive]

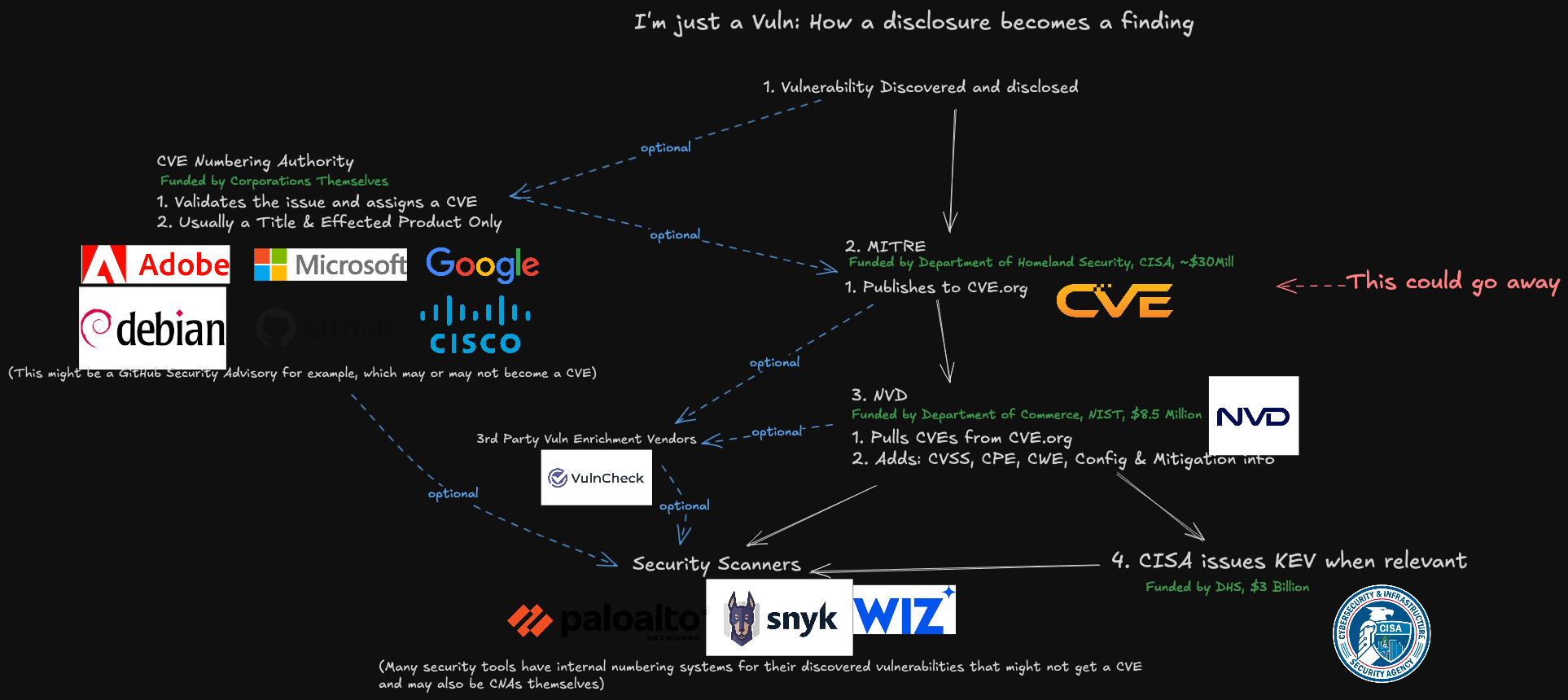

A critical resource that cybersecurity professionals worldwide rely on to identify, mitigate and fix security vulnerabilities in software and hardware is in danger of breaking down. The federally funded, non-profit research and development organization MITRE warned today that its contract to maintain the Common Vulnerabilities and Exposures (CVE) program — which is traditionally funded each year by the Department of Homeland Security — expires on April 16.

A letter from MITRE vice president Yosry Barsoum, warning that the funding for the CVE program will expire on April 16, 2025.

Tens of thousands of security flaws in software are found and reported every year, and these vulnerabilities are eventually assigned their own unique CVE tracking number (e.g. CVE-2024-43573, which is a Microsoft Windows bug that Redmond patched last year).
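Part of what makes a CVE number so useful to tools is that it follows a fixed, machine-readable pattern. Here is a minimal Python sketch of validating and splitting an ID. It assumes the published CVE ID syntax: the prefix `CVE-`, a four-digit year, a hyphen, and a sequence number of at least four digits with no fixed upper length. The `parse_cve_id` function and `CveId` type are hypothetical helpers for illustration, not part of any official CVE tooling.

```python
import re
from typing import NamedTuple, Optional

# Assumed syntax: "CVE-", a four-digit year, "-", then a sequence number
# of four or more ASCII digits (longer sequence numbers are allowed).
CVE_ID_PATTERN = re.compile(r"CVE-([0-9]{4})-([0-9]{4,})")


class CveId(NamedTuple):
    year: int
    sequence: int


def parse_cve_id(text: str) -> Optional[CveId]:
    """Return the year and sequence number of a CVE ID, or None if malformed."""
    # Normalize surrounding whitespace and case, then require a full match.
    match = CVE_ID_PATTERN.fullmatch(text.strip().upper())
    if match is None:
        return None
    return CveId(year=int(match.group(1)), sequence=int(match.group(2)))


print(parse_cve_id("CVE-2024-43573"))  # CveId(year=2024, sequence=43573)
print(parse_cve_id("CVE-2024-123"))    # None: sequence number is too short
```

Matching on the full string (rather than searching within it) keeps IDs like `CVE-2024-43573x` from slipping through as valid.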

There are hundreds of organizations, known as CVE Numbering Authorities (CNAs), that are authorized by MITRE to bestow these CVE numbers on newly reported flaws. Many of these CNAs are country- and government-specific, or tied to individual software vendors or vulnerability disclosure platforms (a.k.a. bug bounty programs).

Put simply, the CVE program MITRE maintains is a critical, widely used resource for centralizing and standardizing information on software vulnerabilities. That means the pipeline of information it supplies is plugged into an array of cybersecurity tools and services that help organizations identify and patch security holes, ideally before malware or malcontents can wriggle through them.

“What the CVE lists really provide is a standardized way to describe the severity of that defect, and a centralized repository listing which versions of which products are defective and need to be updated,” said Matt Tait, chief operating officer of Corellium, a cybersecurity firm that sells phone-virtualization software for finding security flaws.

In a letter sent today to the CVE board, MITRE Vice President Yosry Barsoum warned that on April 16, 2025, “the current contracting pathway for MITRE to develop, operate and modernize CVE and several other related programs will expire.”

“If a break in service were to occur, we anticipate multiple impacts to CVE, including deterioration of national vulnerability databases and advisories, tool vendors, incident response operations, and all manner of critical infrastructure,” Barsoum wrote.

MITRE told KrebsOnSecurity the CVE website listing vulnerabilities will remain up after the funding expires, but that new CVEs won’t be added after April 16.

A representation of how a vulnerability becomes a CVE, and how that information is consumed. Image: James Berthoty, Latio Tech, via LinkedIn.

DHS officials did not immediately respond to a request for comment. The program is funded through DHS’s Cybersecurity & Infrastructure Security Agency (CISA), which is currently facing deep budget and staffing cuts by the Trump administration.

Former CISA Director Jen Easterly said the CVE program is a bit like the Dewey Decimal System, but for cybersecurity.

“It’s the global catalog that helps everyone—security teams, software vendors, researchers, governments—organize and talk about vulnerabilities using the same reference system,” Easterly said in a post on LinkedIn. “Without it, everyone is using a different catalog or no catalog at all, no one knows if they’re talking about the same problem, defenders waste precious time figuring out what’s wrong, and worst of all, threat actors take advantage of the confusion.”

John Hammond, principal security researcher at the managed security firm Huntress, told Reuters he swore out loud when he heard the news that CVE’s funding was in jeopardy, and that losing the CVE program would be like losing “the language and lingo we used to address problems in cybersecurity.”

“I really can’t help but think this is just going to hurt,” said Hammond, who posted a YouTube video to vent about the situation and alert others.

Several people close to the matter told KrebsOnSecurity this is not the first time the CVE program’s budget has been left in funding limbo until the last minute. Barsoum’s letter, which was apparently leaked, sounded a hopeful note, saying the government is making “considerable efforts to continue MITRE’s role in support of the program.”

Tait said that without the CVE program, risk managers inside companies would need to continuously monitor many other places for information about new vulnerabilities that may jeopardize the security of their IT networks. As a result, software updates could more often be misprioritized, leaving companies running hackable software for longer than they otherwise would, he said.

“Hopefully they will resolve this, but otherwise the list will rapidly fall out of date and stop being useful,” he said.

What do you call it when an economic bubble stops growing?

In February, stock analysts at TD Cowen spotted that Microsoft had cancelled leases for new data centres: 200 megawatts in the US, and one gigawatt of planned leases around the world.

Microsoft denied everything. But TD Cowen kept investigating and found another two gigawatts of cancelled leases in the US and Europe. [Bloomberg, archive]

Bloomberg has now confirmed that Microsoft has halted new data centres in Indonesia, the UK, Australia and the US. [Bloomberg, archive]

The Cambridge, UK site was specifically designed to host Nvidia GPU clusters. Microsoft also pulled out of the new Docklands Data Centre in Canary Wharf, London.

In Wisconsin, US, Microsoft had already spent $262 million on construction — but then just pulled the plug.

Mustafa Suleyman of Microsoft told CNBC that instead of being “the absolute frontier,” Microsoft now prefers AI models that are “three to six months behind.” [CNBC]

Google has taken up some of Microsoft’s abandoned deals in Europe. OpenAI took over Microsoft’s contract with CoreWeave. [Reuters]

But bubbles run on the promise of future growth. That’s looking shaky.

Joe Tsai of Chinese retail and IT giant Alibaba warned that AI data centres might be looking like a bubble! You know, like the data centre bubble that had already been happening for some time in China. [Bloomberg, archive]

It’s pretty much the answer to a trivia question at this point, but there was once a version of VHS that looked better than DVDs. Really.

“I was amazed. Visually D-Theater is not just an improvement over DVD. It leaves DVD in the dust, as difficult as that might be for DVD’s growing legion of fans to visualize.”

— Mike Snider, a writer for USA Today, reviewing the D-VHS format in 2002. At the time of the review, D-VHS could deliver 1080i video, while 480p was the norm in DVD-land. For a couple of years, it was the highest-quality consumer video format in the land.

![D-VHS example](/uploads/DVHS_example1.jpg)

Why there should have been a market for D-VHS in the late 1990s

I don’t think it was necessarily a given that we were going to switch to discs. Sure, it became obvious by the turn of the 21st century that DVDs were going to be the film format du jour, holding on even better than Blu-Rays did.

Part of that was inertia. We were already comfortable with DVDs, so why upgrade, even with all the technical advantages that a higher-resolution format had to offer? If you look at the data, Blu-Rays never even came close to topping the DVD market—per CNBC, the peak year for Blu-Rays in the U.S. came in 2013, and was roughly one-seventh of the DVD’s peak year.

In other words, the DVD was more versatile than we gave it credit for, and that helped with its staying power. Perhaps the problem with the Blu-Ray was that it wasn’t different enough—which meant, while it was successful, it was no match for the streaming revolution.

To me, that is the best surface-level explanation of why D-VHS never took off, despite arguably being better than the DVD at all the things people say they care about, like video quality. With D-VHS, the VHS format put up a legitimate fight, and it arguably did better than anyone might give it credit for today. But it wasn’t a reinvention, and I think consumers were ready for one.

The one knock against disc-based formats was the very knock D-VHS was well-positioned to knock out: it could record video, and at high quality. On top of that, it was actually better than DVD at high-definition video, and in its highest-end format, it could store as much data as a dual-layer Blu-Ray.

And on top of all that, it was backwards-compatible, meaning that if you had a large collection of VHS tapes already in your library, you could still use them with just one device, limiting entertainment center clutter.

To be clear, this wasn’t JVC’s first go-around with a higher-resolution take on videotape. The company’s W-VHS, released in Japan in 1993, was the first consumer video format capable of displaying images in 1080i, easily the highest resolution available to traditional consumers. But that was still analog. D-VHS was digital, and digital was ambitious.

But when it launched, it certainly felt like an uphill battle. As Popular Mechanics noted in 1998 in an article titled “For Videophiles Only,” it actually came to the market before all the HDTV signals did:

The first digital products included computers and compact disc players. Within the last few years, digital camcorders, DSS (digital satellite systems), and DVD (digital video discs) have burst onto the electronics scene. Next year will bring digital television and high-definition television (HDTV) programming to market, now that the FCC has given final approval of channel allocation to the 1600 or so television stations across the country.

But you don’t have to wait until next year to enjoy the incredible clarity and stunning definition of digital video. You don’t have to wait a year or more to turn on your television and enjoy images totally free of distortion, snow, interference, or picture noise. Trouble is, no television station will be generating these great video images for you in the near future. You’ll have to generate them yourself—from a digital videocassette recorder.

That’s right: At first, its most prominent feature was useless to the average person.

But even if you weren’t recording in digital, D-VHS had the advantage of being a format that could go on for miles. It was possible to record a day and a half of programming on a single tape in its lowest-quality mode, without having to change the tape. Plus, if you wanted to record digital signals from your computer, D-VHS let you do so with another then-emerging technology: FireWire.

Put another way, this was a dream machine for people committed to recording stuff for hours and hours on end, who wanted better quality than you could get out of a standard analog tape.

Some of these people would go to great lengths to get more out of these players. A common hack during the early 2000s was to modify either the tapes or the players so that S-VHS tapes could be used for recording in D-VHS players. This was a format for nerds, and nerds were willing to go above and beyond to save a little money. Some of them determined that blank D-VHS tapes differed from S-VHS tapes only in the placement of a plastic hole.

As one AVS Forum commenter put it in 2003: “Whatever the tape and DVCR manufacturers say, I am convinced this hole is the only difference in the tapes.”

Was the quality of D-VHS good enough to validate this kind of trickery? Let’s go to the tape. The YouTube video archivist ENunn has uploaded dozens of videos of D-VHS captures onto his various YouTube channels, and they feature some of the best quality you’ve probably ever seen when it comes to re-uploaded commercials from 20+ years ago. The above clip, from 2003, would be nothing special if it originated from a PC. But pulled off videotape? It’s nothing short of spectacular.

And it’s all the more impressive in higher resolution, as this 2007 clip from a PBS broadcast shows. I don’t think regular people necessarily wanted something like this (we were fine with our recorded-over videotapes, thank you very much), but if you were a video nerd or amateur archivist, this kind of quality was hard to top.

Someone had to think ahead and grab all this stuff when it was originally on the air, and it’s honestly impressive to look at in retrospect. The problem was, few people invested in this technology. And you might be wondering why.


2004

The year that the Federal Communications Commission created a requirement for cable providers to offer FireWire to customers who wanted it. This was sold as a benefit largely for D-VHS owners, who could record direct digital signals from their cable boxes onto high-quality tapes without any additional compression. In reality, it also turned out to be a perk for computer owners, who could turn their computers into makeshift DVRs, though this use case didn’t last because many cable providers scrambled their broadcasts. AnandTech has one such example of this in action, involving a Mac Mini.

![D-Theater player](/uploads/d-theater-player.jpg)

Don’t make me think: The reasons D-VHS didn’t catch on feel simple in retrospect

For the past three decades, a specific dynamic has played out in content distribution: When it comes to physical media, less digital rights management is better. DRM is a complicating factor, creating arbitrary limits that make it harder to use the devices we paid for.

Many turn-of-the-century disc-based formats, such as Super Audio CD, had restrictive copy protection, put in at the behest of content companies. These formats cropped up everywhere for a while. But they forced hardware manufacturers to lead with consumer-unfriendly messaging and confusing feature sets, and that was their downfall. Consumers immediately realized that digital formats like MP3s were far easier to use, and just ignored the format war entirely.

And that, in many ways, is the story of D-VHS. The complicated rules around the format’s digital rights management meant recording digital video, or even trying to choose the right player, was complex and time-consuming.

It’s largely forgotten today, but the DVD succeeded partly because its DRM scheme, the Content Scrambling System, was cracked so quickly. The crack, a program called DeCSS, created legal headaches for years and arguably gave birth to modern-day piracy. But it also made DVDs the go-to medium for physical film distribution in the computer era.

D-VHS, meanwhile, was one of the few ways to capture encrypted digital video without converting it to analog first. That meant that if you wanted to capture the live feed of a satellite signal, you had to use one of these machines. Making things worse: The video was difficult to convert to another format from that point because of content protection. It used High-bandwidth Digital Content Protection (HDCP), the same copy-protection tech used by HDTVs and a key part of the ubiquitous HDMI cable format.

Plus, the sheer size of the content was initially believed to limit the potential for piracy, as a piece in Wired suggested in 2001:

JVC introduced the new D-VHS tape at the Consumer Electronics Show (CES) along with a high definition television (HDTV) set that protects high definition content from being copied. Video on D-VHS tapes is uncompressed, so it’s enormous. A 75GB hard disk would only hold around 30 minutes of the video, according to company officials, making the trading of HD content over the Internet impossible.

(To which I say, LOL, sure Jan. Someone didn’t consider that video compression was about to become an arms race.)

The format, which initially didn’t rely on pre-recorded media, eventually got its own D-Theater releases—which were the best you could do with an HDTV without using a set-top box or a digital tuner.

But even with the growing interest in theater-quality video, some studios were looking at D-Theater and thinking to themselves, “Wait, doesn’t this just undermine what we’re doing with DVDs?” That led some home video distributors, like Warner Home Entertainment and the Sony-backed Columbia TriStar Home Entertainment, to ignore the market entirely. The latter’s then-president, Ben Feingold, suggested tape-based mediums were old hat.

“As far as we’re concerned, D-VHS is not a commercial product,” Feingold told Variety in 2002. “The enormous success of DVD leads us to believe, both intuitively and practically, that there’s a strong preference for a disc-based product.”

At the same time, though, you can clearly see the potential. This D-Theater demo tape, also captured by the aforementioned ENunn, looks pretty mind-blowing even now, despite the graphics looking somewhat dated. You can definitely feel the oomph of the video format in a way that even DVDs didn’t quite capture at the time.

Ironically, D-Theater flipped the situation that existed in the home video industry just a decade earlier: In the ’90s, the videophile format was LaserDisc and the consumer format was VHS. Now, D-Theater was trying to take over the LaserDisc market, while DVD was the VHS-like format of its time.

But D-VHS had many problems: Because it wasn’t a random-access format, it didn’t come with the myriad extra features you could get on a DVD or LaserDisc. For most of its history, it didn’t even support additional audio tracks. Given the importance of audio commentary as a selling point for movies and TV shows at retail, it sure feels like a missed opportunity.

Then there were compatibility issues that were pretty much of the manufacturers’ own making. JVC and Mitsubishi both made D-VHS players, but the devices were often quite different, with wildly diverging feature sets that required you to have a ton of components before you could even get going. One review I found, dating to 2002, put it like this:

If you’re familiar with a regular ol’ VHS VCR, as almost everyone is by now, you’ll understand both the Mitsubishi HS-HD2000U and JVC HM-DH30000U right away. Both have silver faceplates and standard VCR controls on their front panels. Both come with mammoth remotes; the Mitsubishi remote has a small display at its top that tells you what you’re doing. There’s nothing about their ability to record HDTV that changes their basic VCR functions.

But there’s one big difference between these decks: The Mitsubishi HS-HD2000U costs $1049, the JVC HM-DH30000U $2000. Why? The JVC is equipped with an expensive MPEG encoder/decoder. The encoder can upconvert analog signals to digital so the unit can function as a digital archiver. The decoder provides for the JVC’s HD component analog output.

In addition, the JVC is equipped to play back prerecorded high-definition movies recorded using JVC’s new, proprietary D-Theater format, which includes robust copy protection. Last year, JVC quietly won agreement from the Motion Picture Association of America to market prerecorded movies protected with D-Theater. That infuriated Mitsubishi, which, like the rest of industry, regards VHS as an open standard, meaning that any tape playable on one VHS machine should be playable on all. Nonetheless, JVC won agreement from Fox, Universal, DreamWorks, and Artisan to begin releasing D-VHS, HD movies. The studios have announced that the first films to be released in this format will be Independence Day, Die Hard, X-Men, U-571, and the two Terminator films. As of press time, none were yet available, nor had pricing been established. But to play them, you’ll have to spend almost $1000 more and buy the JVC VCR. (JVC says a less expensive version will come out soon.)

Say what you will about DVD players, but they generally worked the same between iterations. A $200 player and a $2,000 player ultimately played the same movies. But JVC’s bet on DRM to win over the film studios saddled the format with complex cruft on top of the already complex cruft the format itself created.

And then there are more practical considerations: Netflix essentially disrupted traditional video rentals thanks largely to the mechanics of the postal system. Discs were cheap to ship; tapes, not as much. That obviously put D-VHS at a disadvantage from a rental standpoint.

DRM prevented unauthorized copying, but also added comical complexity to these tools. Hell, even figuring out how to pirate movies with BitTorrent was easier than working your way through the myriad options that D-VHS offered. Compared to formats that relied on hard drives or discs, this was just an unseemly mess. Given all that, it’s not really surprising that, when Blu-Ray hit the market in 2006, D-VHS was already something of a footnote as an entertainment format.

In retrospect, D-VHS was an enthusiast format that just couldn’t get it together.

“We have two trucks that we own. We built them and we own them. They were specially built. All of the equipment was specially designed. We’ve got our own server system. We’ve got integrated backup to D-VHS and HDCam. We’ve got duplicated systems internally so we won’t have a break down.”

— Mark Cuban, in a 2002 interview with Post Magazine about the creation of HDNet, his high-resolution cable channel, which aired programming in 1080i at a time when that was fairly uncommon. It’s forgotten now, but before he became a sports franchise owner and Shark Tank regular, he made his fortune in streaming video. After selling Broadcast.com to Yahoo for billions of dollars, he created HDNet, which leaned hard into high-resolution video, and he often used D-VHS tapes to play HD content on his 102-inch TV screen. “The hi-def screen spoils you,” Cuban told Wired that same year. “I can’t watch regular TV anymore. It just isn’t worth the effort.” The network exists today as AXS TV, which Cuban still maintains a stake in.

These days, content on VHS tapes can be found for cheap, reflecting the format’s one-time ubiquity. You can find them at any thrift store for pennies on the dollar, often of varying quality.

![Dr. T & The Women on D-Theater](/uploads/drtwomendtheater.jpg)

But D-VHS remains a frustratingly expensive format to collect for. One look at eBay shows that 1080i-quality D-Theater videos sell for more than $50 a pop, despite the films themselves not exactly being obscurities. A $99 copy of Dr. T & The Women, a film that sells on Amazon for less than $7 on DVD and $3 on VHS (and is freely available on Amazon Prime), just feels like a slap in the face. When a film is that expensive just because of its format, the price pretty much reflects the format’s obscurity or technical appeal rather than the film’s quality.

(That’s especially true given that used players go for about $200 nowadays, with a premium on D-VHS devices that support D-Theater.)

To me, the most interesting part of D-VHS is that it technically still has value. If you want to record a digital video feed and not lose fidelity, it works—though DRM challenges and hardware complexities mean you might be better off using a DVR on your home server.

D-VHS represented a home theater fanatic’s greatest desire, a format that, in its time, worked better than anything else out there. But whether because it was on the bleeding edge or because of the DRM girding the players, manufacturers forgot that regular people use this stuff, too. It left them in the dust in a way that regular VHS never did. Of course it failed.

Not to say Blu-Ray was the greatest format ever, but at least Sony was smart enough to shove it in a device the average person could understand, rather than making it so obtuse that nobody could figure it out.

There just aren’t that many people who want to record HDTV-quality commercials in 1080i.
