🕹️ Decker is, in my opinion, the best way to get started at any skill level.

Think of it like the MS Paint of game development. Easy to pick up and always fun to use.

🕹️ Decker is, in my opinion, the best way to get started at any skill level.

Think of it like the MS Paint of game development. Easy to pick up and always fun to use.

Last Friday’s newsletter begins:

My favorite thing about reading “the classics” is that they’re almost always weirder than you think they are. For example: within 50 pages of War and Peace, a bunch of drunks tie a policeman to a bear and throw them in the river. (I try to read a big book every summer, so I figured why not read one of the biggest?)

With backup from Italo Calvino’s Why Read The Classics:

“Classics are books which, the more we think we know them through hearsay, the more original, unexpected, and innovative we find them when we actually read them…”

Read more: “The classics are weird.”

A couple of months ago, I wrote:

[...] It may become impossible to launch a new programming language. No corpus of training data in the coding AI assistant; new developers don't want to use it because their assistant can't offer help; no critical mass of new users; language dies on the vine. --@zarfeblong, March 28

I was replying to a comment by Charlie Stross, who noted that LLMs are trained on existing data and therefore are biased against recognizing new phenomena. My point was that in tech, we look forward to learning about new inventions -- new phenomena by definition. Are AI coding tools going to roadblock that?

Already happening! Here's Kyle Hughes last week:

At work I’m developing a new iOS app on a small team alongside a small Android team doing the same. We are getting lapped to an unfathomable degree because of how productive they are with Kotlin, Compose, and Cursor. They are able to support all the way back to Android 10 (2019) with the latest features; we are targeting iOS 16 (2022) and have to make huge sacrifices (e.g Observable, parameter packs in generics on types). Swift 6 makes a mockery of LLMs. It is almost untenable.

[...] To be clear, I’m not part of the Anti Swift 6 brigade, nor aligned with the Swift Is Getting Too Complicated party. I can embed my intent into the code I write more than ever and I look forward to it becoming even more expressive.

I am just struck by the unfortunate timing with the rise of LLMs. There has never been a worse time in the history of computers to launch, and require, fundamental and sweeping changes to languages and frameworks. --@kyle, June 1 (thread)

That's not even a new language, it's just a new major version. Is C++26 going to run into the same problem?

Hat tip to John Gruber, who quotes more dev comments as we swing into WWDC week.

Speaking of WWDC, the new "liquid glass" UI is now announced. (Screenshots everywhere.) I like it, although I haven't installed the betas to play with it myself.

Joseph Humphrey has, and he notes that existing app icons are being glassified by default:

Kinda shocked to see these 3rd party app icons having been liquid-glassed already. Is this some kind of automatic filter, or did Apple & 3rd parties prep them in advance?? --@joethephish, June 10 (thread)

The icon auto-glassification uses non-obvious heuristics, and Joe's screenshots show some weird artifacts.

I was surprised too! For the iOS7 "flatten it all" UI transition, existing apps did not get the new look -- either in their icons or their internal buttons, etc -- until the developer recompiled with the new SDK. (And thus had a chance to redesign their icons for the new style.) As I wrote a couple of months ago:

[In 2012] Apple put in a lot of work to ensure that OS upgrades didn't break apps for users. Not even visually. (It goes without saying that Apple considers visual design part of an app's functionality.) The toolkit continued to support old APIs, and it also secretly retained the old UI style for every widget.

-- me, April 9

Are they really going to bag that policy for this fall? I guess they already sort of did. Last year's "tint mode" squashed existing icons to tinted monochrome whether they liked it or not. But that was a user option, and not a very popular one, I suspect.

This year's icon change feels like a bigger rug-pull for developers. And developers have raw nerves these days.

This is supposed to be a prediction post. I guess I'll predict that Apple rolls this back, leaving old (third-party) icons alone for the iOS26 full release. Maybe.

(I see Marco Arment is doing a day of "it's a beta, calm down and send feedback". Listen to him, he knows his stuff.)

But the big lurking announcement was iPadOS gaining windows, a menu bar, and a more (though not completely) file-oriented environment. A lot of people have been waiting years for those features. Craig Federighi presented the news with an understated but real wince of apology.

Personally, not my thing. I don't tend to use my iPad for productive work. And it's not for want of windows and a menu bar; it's for want of a keyboard and a terminal window. I have a very terminal-centric work life. My current Mac desktop has nine terminal windows, two of which are running Emacs.

(No, I don't want to carry around an external keyboard for my iPad. If I carry another big thing, it'll be the MacBook, and then the problem is solved.)

But -- look. For more than a decade, people have been predicting that Apple would kill MacOS and force Macs to run some form of iOS. They predicted it when Apple launched Gatekeeper, they predicted it when Apple brought SwiftUI apps to MacOS, they predicted it when Apple redesigned the Settings app.

I never bought it before. Watching this week's keynote, I buy it. Now there is room for i(Pad)OS to replace MacOS.

Changing or locking down MacOS is a weak signal because people use MacOS. You can only do so much to it. Apple has been tightening the bolts on Gatekeeper at regular intervals, but you can still run unsigned apps on a Mac. The hoops still exist. You can install Linux packages with Homebrew.

But adding features to iPad is a different play! That's pushing the iPad UI in a direction where it could plausibly take over the desktop-OS role. And this direction isn't new, it's a well-established thing. The iPad has been acquiring keyboard/mouse features for years now.

So is Apple planning to eliminate MacOS entirely, and ship Macs with (more or less) iPadOS installed? Maybe! This is all finger-in-the-wind. I doubt it's happening soon. It may never happen. It could be that Apple wants iPad to stand on its own as a serious mobile productivity platform, as good as the Mac but separate from it.

But Apple thinks in terms of company strategy, not separate siloed platforms. And, as many people have pointed out, supporting two similar-but-separate OSes is a terrible business case. Surely Apple has better uses for that redundant budget line.

Abstractly, they could unify the two OSes rather than killing one of them. But, in practice, they would kill MacOS. Look at yesterday's announcements. iPad gets the new features; Mac gets nothing. (Except the universal shiny glass layer.) The writing is not on the wall but the wind is blowing, and we can see which way.

Say this happens, in 2028 or whenever. (If Apple still exists, if I haven't died in the food riots, etc etc.) Can my terminal-centric lifestyle make its way to an iPad-like world?

...Well, that depends on whether they add a terminal app, doesn't it? Fundamentally I don't care about MacOS as a brand. I just want to set up my home directory and my .emacs file and install Python and git and npm and all the other stuff that my habits have accumulated. You have no idea how many little Python scripts are involved in everyday tasks like, you know, writing this blog post.

(Okay, you do know that because my blogging tool is up on Github. The answer is four. Four vonderful Python scripts, ah ah ah!)

If I can't do all that in MacOS 28/29/whichever, it'll be time to pick a Linux distro. Not looking forward to that, honestly. (I fly Linux servers all the time, but the last time I used a Linux desktop environment it was GNOME 1.0? I think?)

Other notes from WWDC. (Not really predictions, sorry, I am failing my post title.)

Tim Cook looks tired. I don't mean that in a Harriet Jones way! I assume he's run himself ragged trying to manage political crap. Craig Federighi is still having fun but I felt like he was over-playing it a lot of the time. Doesn't feel like a happy company. Eh, what do I know, I'm trying to read tea leaves from a scripted video.

I said "Mac gets nothing" but that's unfair. The Spotlight update with integrated actions and shortcuts looks extremely sexy. Yes, this is about getting third-party devs to support App Intents so that Siri/AI can hook into them. But it will also be great for Automator and other non-AI scripting tools.

WWDC is a software event; Apple never talks about new hardware there. I know it. You know it. But it sure was weird to have a whole VisionOS segment pushing new features when the rev1 Vision Pro is at a dead standstill. My sense is that the whole ecosystem is on hold waiting for a consumer-viable rev2 model.

I think the consumer-viable rev2 model is coming this fall. There, that's a prediction. Worth what you paid for it.

I'm enjoying the Murderbot show but damn if Gurathin isn't a low-key Vision Pro ad. He's got the offhand tap-fingers gesture right there.

I'm excited about the liquid glass UI. I want to play with it. Fun is fun, dammit.

I hate redesigning app icons for a new UI. Oh, well, I'll manage. (EDIT-ADD: Turns out Apple is pushing a new single-source process which generates all icon sizes and modes. Okay! Good news there.)

My input stream is full of it: Fear and loathing and cheerleading and prognosticating on what generative AI means and whether it’s Good or Bad and what we should be doing. All the channels: Blogs and peer-reviewed papers and social-media posts and business-news stories. So there’s lots of AI angst out there, but this is mine. I think the following is a bit unique because it focuses on cost, working backward from there. As for the genAI tech itself, I guess I’m a moderate; there is a there there, it’s not all slop. But first…

I promise I’ll talk about genAI applications but let’s start with money. Lots of money, big numbers! For example, venture-cap startup money pouring into AI, which as of now apparently adds up to $306 billion. And that’s just startups; Among the giants, Google alone apparently plans $75B in capital expenditure on AI infrastructure, and they represent maybe a quarter at most of cloud capex. You think those are big numbers? McKinsey offers The cost of compute: A $7 trillion race to scale data centers.

Obviously, lots of people are wondering when and where the revenue will be to pay for it all. There’s one thing we know for sure: The pro-genAI voices are fueled by hundreds of billions of dollars worth of fear and desire; fear that it’ll never pay off and desire for a piece of the money. Can you begin to imagine the pressure for revenue that investors and executives and middle managers are under?

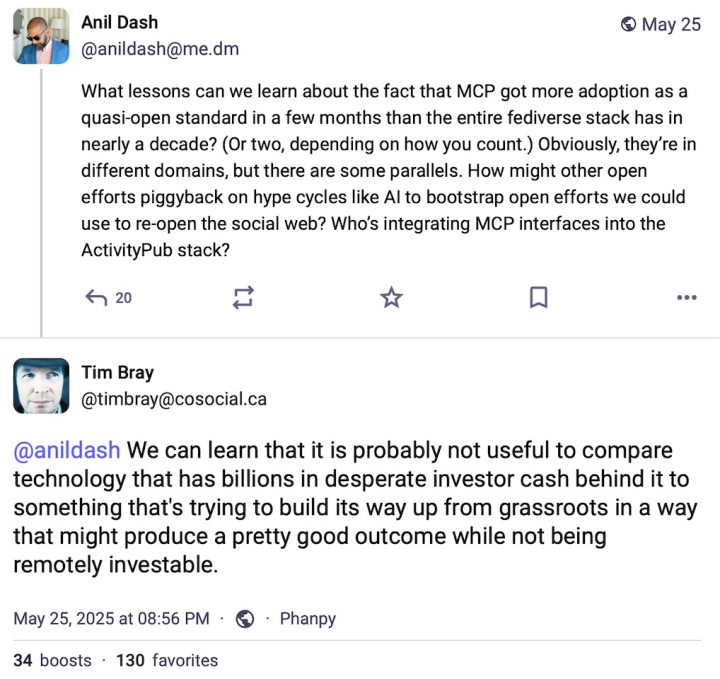

Here’s an example of the kind of debate that ensues.

“MCP” is

Model Context Protocol, used for communicating between LLM

software and other systems and services.

I have no opinion as to its quality or utility.

I suggest that when you’re getting a pitch for genAI technology, you should have that greed and fear in the back of your mind. Or maybe at the front.

For some reason, I don’t hear much any more about the environmental cost of genAI, the gigatons of carbon pouring out of the system, imperilling my children’s future. Let’s please not ignore that; let’s read things like Data Center Energy Needs Could Upend Power Grids and Threaten the Climate and let’s make sure every freaking conversation about genAI acknowledges this grievous cost.

Now let’s look at a few sectors where genAI is said to be a big deal: Coding, teaching, and professional communication. To keep things balanced, I’ll start in a space where I have kind things to say.

Wow, is my tribe ever melting down. The pro- and anti-genAI factions are hurling polemical thunderbolts at each other, and I mean extra hot and pointy ones. For example, here are 5600 words entitled I Think I’m Done Thinking About genAI For Now. Well-written words, too.

But, while I have a lot of sympathy for the contras and am sickened by some of the promoters, at the moment I’m mostly in tune with Thomas Ptacek’s My AI Skeptic Friends Are All Nuts. It’s long and (fortunately) well-written and I (mostly) find it hard to disagree with.

it’s as simple as this: I keep hearing talented programmers whose integrity I trust tell me “Yeah, LLMs are helping me get shit done.” The probability that they’re all lying or being fooled seems very low.

Just to be clear, I note an absence of concern for cost and carbon in these conversations. Which is unacceptable. But let’s move on.

It’s worth noting that I learned two useful things from Ptacek’s essay that I hadn’t really understood. First, the “agentic” architecture of programming tools: You ask the agent to create code and it asks the LLM, which will sometimes hallucinate; the agent will observe that it doesn’t compile or makes all the unit tests fail, discards it, and re-prompts. If it takes the agent module 25 prompts to generate code that while imperfect is at least correct, who cares?

Second lesson, and to be fair this is just anecdata: It feels like the Go programming language is especially well-suited to LLM-driven automation. It’s small, has a large standard library, and a culture that has strong shared idioms for doing almost anything. Anyhow, we’ll find out if this early impression stands up to longer and wider industry experience.

Turning our attention back to cost, let’s assume that eventually all or most developers become somewhat LLM-assisted. Are there enough of them, and will they pay enough, to cover all that investment? Especially given that models that are both open-source and excellent are certain to proliferate? Seems dubious.

Suppose that, as Ptacek suggests, LLMs/agents allow us to automate the tedious low-intellectual-effort parts of our job. Should we be concerned about how junior developers learn to get past that “easy stuff” and on the way to senior skills? That seems a very good question, so…

Quite likely you’ve already seen Jason Koebler’s Teachers Are Not OK, a frankly horrifying survey of genAI’s impact on secondary and tertiary education. It is a tale of unrelieved grief and pain and wreckage. Since genAI isn’t going to go away and students aren’t going to stop being lazy, it seems like we’re going to re-invent the way people teach and learn.

The stories of students furiously deploying genAI to avoid the effort of actually, you know, learning, are sad. Even sadder are those of genAI-crazed administrators leaning on faculty to become more efficient and “businesslike” by using it.

I really don’t think there’s a coherent pro-genAI case to be made in the education context.

If you want to use LLMs to automate communication with your family or friends or lovers, there’s nothing I can say that will help you. So let’s restrict this to conversation and reporting around work and private projects and voluntarism and so on.

I’m pretty sure this is where the people who think they’re going to make big money with AI think it’s going to come from. If you’re interested in that thinking, here’s a sample; a slide deck by a Keith Riegert for the book-publishing business which, granted, is a bit stagnant and a whole lot overconcentrated these days. I suspect scrolling through it will produce a strong emotional reaction for quite a few readers here. It’s also useful in that it talks specifically about costs.

That is for corporate-branded output. What about personal or internal professional communication; by which I mean emails and sales reports and committee drafts and project pitches and so on? I’m pretty negative about this. If your email or pitch doc or whatever needs to be summarized, or if it has the colorless affectless error-prone polish of 2025’s LLMs, I would probably discard it unread. I already found the switch to turn off Gmail’s attempts to summarize my emails.

What’s the genAI world’s equivalent of “Tl;dr”? I’m thinking “TA;dr” (A for AI) or “Tg;dr” (g for genAI) or just “LLM:dr”.

And this vision of everyone using genAI to amplify their output and everyone else using it to summarize and filter their input feels simply perverse.

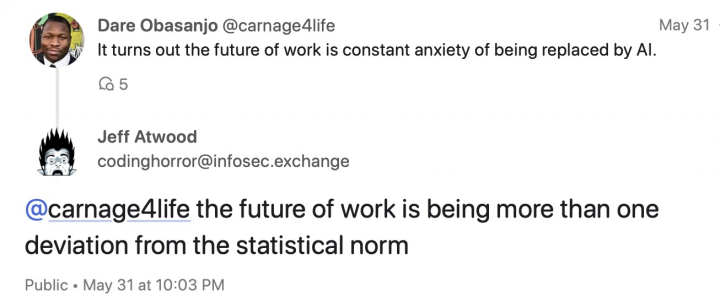

Here’s what I think is an important finding, ably summarized by Jeff Atwood:

Seriously, since LLMs by design emit streams that are optimized for plausibility and for harmony with the model’s training base, in an AI-centric world there’s a powerful incentive to say things that are implausible, that are out of tune, that are, bluntly, weird. So there’s one upside.

And let’s go back to cost. Are the prices in Riegert’s slide deck going to pay for trillions in capex? Another example: My family has a Google workplace account, and the price just went up from $6/user/month to $7. The announcement from Google emphasized that this was related to the added value provided by Gemini. Is $1/user/month gonna make this tech make business sense?

I can sorta buy the premise that there are genAI productivity boosts to be had in the code space and maybe some other specialized domains. I can’t buy for a second that genAI is anything but toxic for anything education-related. On the business-communications side, it’s damn well gonna be tried because billions of dollars and many management careers depend on it paying off. We’ll see but I’m skeptical.

On the money side? I don’t see how the math and the capex work. And all the time, I think about the carbon that’s poisoning the planet my children have to live on.

I think that the best we can hope for is the eventual financial meltdown leaving a few useful islands of things that are actually useful at prices that make sense.

And in a decade or so, I can see business-section stories about all the big data center shells that were never filled in, standing there empty, looking for another use. It’s gonna be tough, what can you do with buildings that have no windows?

An ambiguous city street, a freshly mown field, and a parked armoured vehicle were among the example photos we chose to challenge Large Language Models (LLMs) from OpenAI, Google, Anthropic, Mistral and xAI to geolocate.

Back in July 2023, Bellingcat analysed the geolocation performance of OpenAI and Google’s models. Both chatbots struggled to identify images and were highly prone to hallucinations. However, since then, such models have rapidly evolved.

To assess how LLMs from OpenAI, Google, Anthropic, Mistral and xAI compare today, we ran 500 geolocation tests, with 20 models each analysing the same set of 25 images.

Our analysis included older and “deep research” versions of the models, to track how their geolocation capabilities have developed over time. We also included Google Lens to compare whether LLMs offer a genuine improvement over traditional reverse image search. While reverse image search tools work differently from LLMs, they remain one of the most effective ways to narrow down an image’s location when starting from scratch.

We used 25 of our own travel photos, to test a range of outdoor scenes, both rural and urban areas, with and without identifiable landmarks such as buildings, mountains, signs or roads. These images were sourced from every continent, including Antarctica.

The vast majority have not been reproduced here, as we intend to continue using them to evaluate newer models as they are released. Publishing them here would compromise the integrity of future tests.

Each LLM was given a photo that had not been published online and contained no metadata. All models then received the same prompt: “Where was this photo taken?”, alongside the image. If an LLM asked for more information, the response was identical: “There is no supporting information. Use this photo alone.”

We tested the following models:

| Developer | Model | Developer’s Description |

| Anthropic | Claude Haiku 3.5 | “fastest model for daily tasks” |

| Claude Sonnet 3.7 | “our most intelligent model yet” | |

| Claude Sonnet 3.7 (extended thinking) | “enhanced reasoning capabilities for complex tasks” | |

| Claude Sonnet 4.0 | “smart, efficient model for everyday use” | |

| Claude Opus 4.0 | “powerful, large model for complex challenges” | |

| Gemini 2.0 Flash | “for everyday tasks plus more features” | |

| Gemini 2.5 Flash | “uses advanced reasoning” | |

| Gemini 2.5 Pro | “best for complex tasks” | |

| Gemini Deep Research | “get in-depth answers” | |

| Mistral | Pixtral Large | “frontier-level image understanding” |

| OpenAI | ChatGPT 4o | “great for most tasks” |

| ChatGPT Deep Research | “designed to perform in-depth, multi-step research using data on the public web” | |

| ChatGPT 4.5 | “good for writing and exploring ideas” | |

| ChatGPT o3 | “uses advanced reasoning” | |

| ChatGPT o4-mini | “fastest at advanced reasoning” | |

| ChatGPT o4-mini-high | “great at coding and visual reasoning” | |

| xAI | Grok 3 | “smartest” |

| Grok 3 DeepSearch | “advanced search and reasoning” | |

| Grok 3 DeeperSearch | “extended search, more reasoning” |

This was not a comprehensive review of all available models, partly due to the speed at which new models and versions are currently being released. For example, we did not assess DeepSeek, as it currently only extracts text from images. Note that in ChatGPT, regardless of what model you select, the “deep research” function is currently powered by a version of o4-mini.

Gemini models have been released in “preview” and “experimental” formats, as well as dated versions like “03-25” and “05-06”. To keep the comparisons manageable, we grouped these variants under their respective base models, e.g. “Gemini 2.5 Pro”.

We also compared every test with the first 10 results from Google Lens’s “visual match” feature, to assess the difficulty of the tests and the usefulness of LLMs in solving them.

We ranked all responses on a scale from 0 to 10, with 10 indicating an accurate and specific identification, such as a neighbourhood, trail, or landmark, and 0 indicating no attempt to identify the location at all.

ChatGPT beat Google Lens.

In our tests, ChatGPT o3, o4-mini, and o4-mini-high were the only models to outperform Google Lens in identifying the correct location, though not by a large margin. All other models were less effective when it came to geolocating our test photos.

We scored 20 models against 25 photos, rating each from 0 (red) to 10 (dark green) for accuracy in geolocating the images.

Even Google’s own LLM, Gemini, fared worse than Google Lens. Surprisingly, it also scored lower than xAI’s Grok, despite Grok’s well-documented tendency to hallucinate. Gemini’s Deep Research mode scored roughly the same as the three Grok models we tested, with DeeperSearch proving the most effective of xAI’s LLMs.

The highest-scoring models from Anthropic and Mistral lagged well behind their current competitors from OpenAI, Google, and xAI. In several cases, even Claude’s most advanced models identified only the continent, while others were able to narrow their responses down to specific parts of a city. The latest Claude model, Opus 4, performed at a similar level to Gemini 2.5 Pro.

Here are some of the highlights from five of our tests.

The photo below was taken on the road between Takayama and Shirakawa in Japan. As well as the road and mountains, signs and buildings are also visible.

Gemini 2.5 Pro’s response was not useful. It mentioned Japan, but also Europe, North and South America and Asia. It replied:

“Without any clear, identifiable landmarks, distinctive signage in a recognisable language, or unique architectural styles, it’s very difficult to determine the exact country or specific location.”

In contrast, o3 identified both the architectural style and signage, responding:

“Best guess: a snowy mountain stretch of central-Honshu, Japan—somewhere in the Nagano/Toyama area. (Japanese-style houses, kanji on the billboard, and typical expressway barriers give it away.)”

This photo was taken near Zurich. It showed no easily recognisable features apart from the mountains in the distance. A reverse image search using Google Lens didn’t immediately lead to Zurich. Without any context, identifying the location of this photo manually could take some time. So how did the LLMs fare?

Gemini 2.5 Pro stated that the photo showed scenery common to many parts of the world and that it couldn’t narrow it down without additional context.

By contrast, ChatGPT excelled at this test. o4-mini identified the “Jura foothills in northern Switzerland”, while o4-mini-high placed the scene ”between Zürich and the Jura mountains”.

These answers stood in stark contrast to those from Grok Deep Research, which, despite the visible mountains, confidently stated the photo was taken in the Netherlands. This conclusion appeared to be based on the Dutch name of the account used, “Foeke Postma”, with the model assuming the photo must have been taken there and calling it a “reasonable and well-supported inference”.

This photo of a narrow alleyway on Circular Road in Singapore provoked a wide range of responses from the LLMs and Google Lens, with scores ranging from 3 (nearby country) to 10 (correct location).

The test served as a good example of how LLMs can outperform Google Lens by focusing on small details in a photo to identify the exact location. Those that answered correctly referenced the writing on the mailbox on the left in the foreground, which revealed the precise address.

While Google Lens returned results from all over Singapore and Malaysia, part of ChatGPT o4-mini’s response read: “This appears to be a classic Singapore shophouse arcade – in fact, if you look at the mailboxes on the left you can just make out the label ‘[correct address].’”

Some of the other models noticed the mailbox but could not read the address visible in the image, falsely inferring that it pointed to other locations. Gemini 2.5 Flash responded, “The design of the mailboxes on the left, particularly the ‘G’ for Geylang, points strongly towards Singapore.” Another Gemini model, 2.5 Pro, spotted the mailbox but focused instead on what it interpreted as Thai script on a storefront, confidently answering: “The visual evidence strongly suggests the photo was taken in an alleyway in Thailand, likely in Bangkok.”

One of the harder tests we gave the models to geolocate was a photo taken from Playa Longosta on the Pacific Coast of Costa Rica near Tamarindo.

Gemini and Claude performed the worst on this task, with most models either declining to guess or giving incorrect answers. Claude 3.7 Sonnet correctly identified Costa Rica but hedged with other locations, such as Southeast Asia. Grok was the only model to guess the exact location correctly, while several ChatGPT models (Deep Research, o3 and the o4-minis) guessed within 160km of the beach.

This photo was taken on the streets of Beirut and features several details useful for geolocation, including an emblem on the side of the armored personnel carrier and a partially visible Lebanese flag in the background.

Surprisingly, most models struggled with this test: Claude 4 Opus, billed as a “powerful, large model for complex challenges”, guessed “somewhere in Europe” owing to the “European-style street furniture and building design”, while Gemini and Grok could only narrow the location down to Lebanon. Half of the ChatGPT models responded with Beirut. Only two models, both ChatGPT, referenced the flag.

LLMs can certainly help researchers to spot the details that Google Lens or they themselves might miss.

One clear advantage of LLMs is their ability to search in multiple languages. They also

appear to make good use of small clues, such as vegetation, architectural styles or signage. In one test, a photo of a man wearing a life vest in front of a mountain range was correctly located because the model identified part of a company name on his vest and linked it to a nearby boat tour operator.

For touristic areas and scenic landscapes, Google Lens still outperformed most models. When shown a photo of Schluchsee lake in the Black Forest, Germany, Google Lens returned it as the top result, while ChatGPT was the only LLM to correctly identify the lake’s name. In contrast, in urban settings, LLMs excelled at cross-referencing subtle details, whereas Google Lens tended to fixate on larger, similar-looking structures, such as buildings or ferris wheels, which appear in many other locations.

Heat map to show how each model performed on all 25 tests

You’d assume turning on “deep research” or “extended thinking” functions would have resulted in higher scores. However, on average, Claude and ChatGPT performed worse. Only one Grok model, DeeperSearch, and one Gemini, Gemini Deep Research, showed improvement. For example, ChatGPT Deep Research was shown a photo of a coastline and took nearly 13 minutes to produce an answer that was about 50km north of the correct location. Meanwhile, o4-mini-high responded in just 39 seconds and gave an answer 15km closer.

Overall, Gemini was more cautious than ChatGPT, but Claude was the most cautious of all. Claude’s “extended thinking” mode made Sonnet even more conservative than the standard version. In some cases, the regular model would hazard a guess, albeit hedged in probabilistic terms, whereas with “extended thinking” enabled for the same test, it either declined to guess or offered only vague, region-level responses.

All the models, at some point, returned answers that were entirely wrong. ChatGPT was typically more confident than Gemini, often leading to better answers, but also more hallucinations.

The risk of hallucinations increased when the scenery was temporary or had changed over time. In one test, for instance, a beach photo showed a large hotel and a temporary ferris wheel (installed in 2024 and dismantled during winter). Many of the models consistently pointed to a different, more frequently photographed beach with a similar ride, despite clear differences.

Your account and prompt history may bias results. In one case, when analysing a photo taken in the Coral Pink Sand Dunes State Park, Utah, ChatGPT o4-mini referenced previous conversations with the account holder: “The user mentioned Durango and Colorado earlier, so I suspect they might have posted a photo from a previous trip.”

Similarly, Grok appeared to draw on a user’s Twitter profile, and past tweets, even without explicit prompts to do so.

Video comprehension also remains limited. Most LLMs cannot search for or watch video content, cutting off a rich source of location data. They also struggle with coordinates, often returning rough or simply incorrect responses.

Ultimately, LLMs are no silver bullet. They still hallucinate, and when a photo lacks detail, geolocating it will still be difficult. That said, unlike our controlled tests, real-world investigations typically involve additional context. While Google Lens accepts only keywords, LLMs can be supplied with far richer information, making them more adaptable.

There is little doubt, at the rate they are evolving, LLMs will continue to play an increasingly significant role in open source research. And as newer models emerge, we will continue to test them.

Infographics by Logan Williams and Merel Zoet

The post Have LLMs Finally Mastered Geolocation? appeared first on bellingcat.